Demand for serverless technologies is on the rise because they offer faster time-to-market by dynamically and automatically allocating compute and memory based on user requests. They also provide cost savings through hands-off infrastructure management, which enables companies to redirect IT budget and human capital from operations to innovation.

Onboarding new data or building new analytics pipelines in traditional analytics architectures typically requires extensive coordination across business, data engineering, and data science and analytics teams to first negotiate requirements, schema, infrastructure capacity needs, and workload management.

For a large number of use cases today, however, business users, data scientists, and analysts are demanding easy, frictionless, self-service options to build end-to-end data pipelines, because it’s hard and inefficient to predefine constantly changing schemas and spend time negotiating capacity slots on shared infrastructure. The exploratory nature of machine learning (ML) and many analytics tasks means you need to rapidly ingest new datasets and clean, normalize, and feature engineer them without worrying about the operational overhead of the infrastructure that runs the data pipelines.

In this article, we describe a serverless framework that was developed for one of our clients. It enables the data science team to rapidly transform data and make inferences using machine learning models without any operational or development overhead.

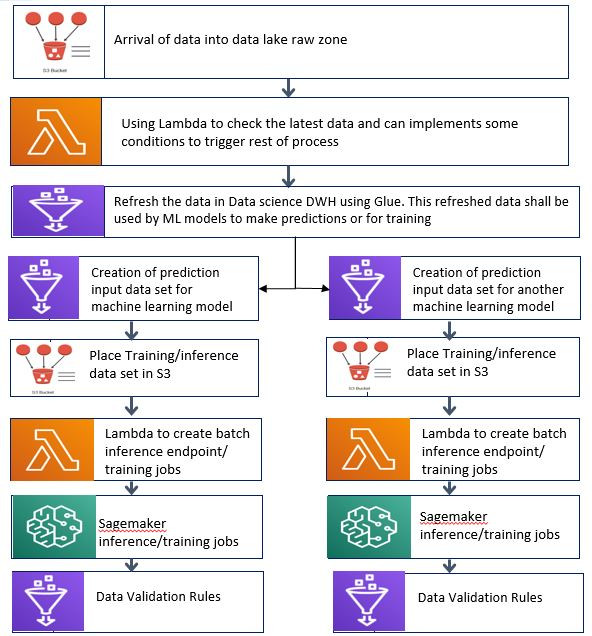

How it Works

Automating machine learning workflows helps to build repeatable and reproducible machine learning models. It is a key step in putting machine learning projects into production, as we want to make sure our models are up to date and perform well on new data.

AWS Glue, Amazon SageMaker, and AWS Lambda can help automate machine learning workflows from data processing to model deployment in a managed environment.

AWS Services & Programming Languages used in this Framework

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy for customers to prepare and load their data for analytics.

Amazon SageMaker is a fully managed service that enables data scientists to build, train, tune, and deploy machine learning models at any scale. This service provides a powerful and scalable compute environment that is also easy to use.

AWS Lambda is a serverless compute service that lets you run code without provisioning or managing servers, creating workload-aware cluster scaling logic, maintaining event integrations, or managing runtimes.

The Amazon S3 Event Notifications feature lets you receive notifications when certain events happen in your S3 bucket.

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance.

AWS CloudFormation gives an easy way to model a collection of related AWS and third-party resources, provision them quickly and consistently, and manage them throughout their lifecycles, by treating infrastructure as code.

Python is an interpreted, high-level, general-purpose programming language. Its design philosophy emphasizes code readability through the use of significant indentation.

Apache Spark is a unified analytics engine for large-scale data processing.

Building Blocks

Framework Design :

Below are some of the design considerations made while developing this framework:

1) An AWS Glue job encapsulates a script that connects to your source data, processes it, and then writes it out to your data target. The Glue ETL logic is written in PySpark. The Spark job runs in an Apache Spark environment managed by AWS Glue and processes data in batches.

2) A CSV control file placed in Amazon S3 controls the flow of the Glue ETL job. It points to the SQL queries that perform the transformations, which are executed in the sequence given in the control file. It also holds other details, such as the target path where the output of each SQL query is saved.

3) All the SQL queries are stored in a Python file placed in an S3 location. The path to this file is provided in the referenced files path of the Glue job. A set of user-defined functions executes these queries in Athena; these functions are also provided in the referenced files path of the Glue job.

4) This framework can be used as a common framework for any ETL workflow. The only change a user needs to make is to download the control file and the SQL queries file from S3 and customise them as needed. The core ETL logic refers to these files at run time.

5) The core Glue ETL logic takes care of notifications and logging for the job.

6) This framework can scale out if you want to run multiple jobs in parallel.

7) A Lambda function is triggered only on successful completion of the Glue jobs. It contains the logic to trigger SageMaker training jobs, prediction endpoints, and batch prediction jobs.

8) The predictions can be validated using customised metrics, depending on the use case.
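To make the control-file idea in points 2 and 3 more concrete, here is a minimal sketch of how the core ETL logic might read the control file and resolve each step to a SQL query. The column names (`step`, `query_name`, `target_path`), the query names, and the bucket paths are all assumptions made for illustration; the article does not specify the real layout. The sketch only orders and resolves the steps, and leaves the actual execution (Spark or Athena) out.

```python
import csv
import io
from dataclasses import dataclass

# Hypothetical control-file layout; the real column names are not given in the article.
SAMPLE_CONTROL_FILE = """step,query_name,target_path
2,join_orders,s3://example-bucket/curated/orders_joined/
1,clean_customers,s3://example-bucket/curated/customers_clean/
"""

# Stand-in for the Python file of SQL queries stored in S3 (illustrative names only).
QUERIES = {
    "clean_customers": "SELECT DISTINCT customer_id, lower(email) AS email FROM raw_customers",
    "join_orders": "SELECT o.*, c.email FROM raw_orders o JOIN customers_clean c USING (customer_id)",
}


@dataclass(frozen=True)
class Step:
    order: int
    query_name: str
    sql: str
    target_path: str


def load_steps(control_csv: str, queries: dict) -> list:
    """Parse the control file and return the steps sorted by execution order."""
    steps = []
    for row in csv.DictReader(io.StringIO(control_csv)):
        name = row["query_name"].strip()
        if name not in queries:
            # Fail early if the control file points at a query that does not exist.
            raise KeyError(f"control file references unknown query: {name}")
        steps.append(
            Step(int(row["step"]), name, queries[name], row["target_path"].strip())
        )
    return sorted(steps, key=lambda s: s.order)


if __name__ == "__main__":
    for step in load_steps(SAMPLE_CONTROL_FILE, QUERIES):
        print(step.order, step.query_name, "->", step.target_path)
```

With a layout like this, a data scientist only edits the CSV rows and the query dictionary, while the loop that walks the ordered steps stays unchanged.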

Advantages of this Framework

1) As mentioned in the introduction, the exploratory nature of ML and many analytics tasks calls for rapidly ingesting, cleaning, normalizing, and feature engineering new datasets without the operational overhead of managing pipeline infrastructure. Data scientists can maintain this framework without touching the core ETL logic: all they need to do is provide SQL queries and modify the control file. There is no need to think about infrastructure, because Glue can be scaled up and down depending on data size and need.

2) The framework is built entirely on serverless services and hence provides greater scalability, both vertical and horizontal, more flexibility, and quicker time to release, all at a reduced cost.

3) Apache Spark is built for big data, so the framework can scale to petabyte-scale datasets.

4) This framework also uses SageMaker, which is a major benefit for data scientists who want a truly end-to-end ML solution.

Conclusion

In this article, we described how you can create a machine learning workflow using AWS serverless services. You could also automate model retraining with Amazon CloudWatch scheduled events. Since you could be making batch predictions daily, you could prepare your data with AWS Glue, run batch predictions, and chain the steps together with this framework. You could also add an SNS step so that you get notified via email, Slack, or SMS on the status of your ML workflows.
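As one possible way to chain data preparation and batch prediction, the sketch below shows a Lambda handler that starts a SageMaker batch transform job when a Glue job succeeds. It assumes the Lambda is invoked by an EventBridge rule matching "Glue Job State Change" events, which the article does not confirm; the model name, S3 paths, content type, and instance type are hypothetical placeholders.

```python
import re

# Hypothetical names; replace with your own model and bucket paths.
MODEL_NAME = "example-model"
INPUT_S3_URI = "s3://example-bucket/curated/features/"
OUTPUT_S3_URI = "s3://example-bucket/predictions/"


def build_transform_request(event: dict):
    """Return create_transform_job arguments, or None if the Glue job did not succeed."""
    detail = event.get("detail", {})
    if event.get("source") != "aws.glue" or detail.get("state") != "SUCCEEDED":
        return None
    # SageMaker job names allow only alphanumerics and hyphens (max 63 characters),
    # so replace other characters in the Glue run id.
    run_id = re.sub(r"[^a-zA-Z0-9]+", "-", detail["jobRunId"]).strip("-")
    job_name = f"batch-{run_id}"[:63].rstrip("-")
    return {
        "TransformJobName": job_name,
        "ModelName": MODEL_NAME,
        "TransformInput": {
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": INPUT_S3_URI}
            },
            "ContentType": "text/csv",  # assumed input format
        },
        "TransformOutput": {"S3OutputPath": OUTPUT_S3_URI},
        "TransformResources": {"InstanceType": "ml.m5.large", "InstanceCount": 1},
    }


def lambda_handler(event, context):
    request = build_transform_request(event)
    if request is None:
        return {"started": False}
    # Imported lazily so the request builder can be exercised without AWS access.
    import boto3

    boto3.client("sagemaker").create_transform_job(**request)
    return {"started": True, "job": request["TransformJobName"]}
```

Keeping the request builder separate from the AWS call makes the trigger logic easy to check locally; the same pattern could be reused for training jobs or for publishing a status message to SNS.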

Many possibilities…